AI Systems Engineering and Reliability Technologies

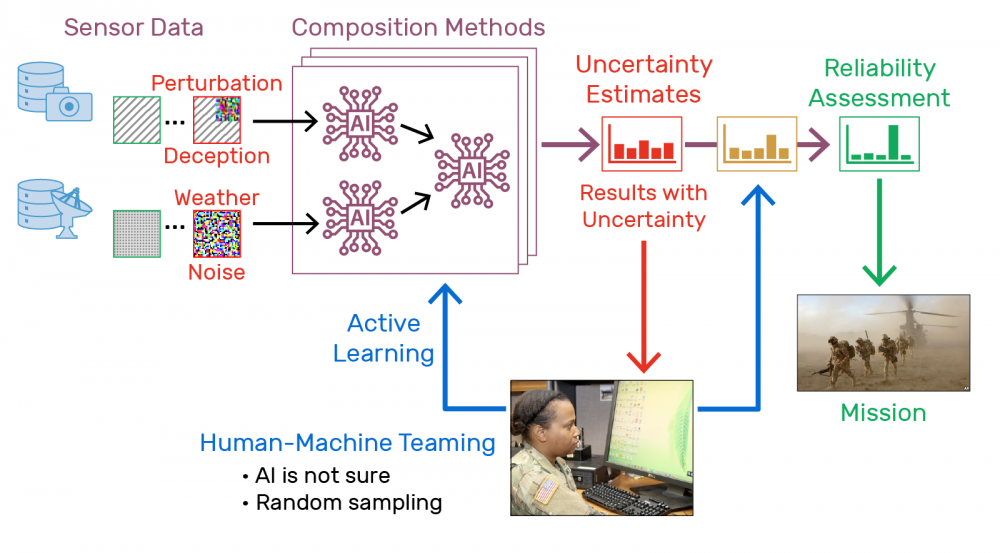

Despite the success of artificial intelligence (AI) in many applications, deep neural networks, the main drivers behind modern AI, are known to break down when they face either intentional attempts to trick them (adversarial attacks) or naturally arising challenges, such as inputs that are noisy or that differ from the training data. These vulnerabilities make it difficult to validate and deploy AI systems for national security missions, which often have strict safety, legal, and ethical requirements. In the AI Systems Engineering and Reliability Technologies (ASERT) project, we aim to accelerate the development and deployment of mission-ready AI systems that help servicemembers conduct repetitive, dangerous, or difficult tasks.

The ASERT project team is developing scalable tools and techniques for enhancing, evaluating, and monitoring the reliability of AI systems so that they achieve the desired end-to-end mission performance. For example, we improve overall system resilience by combining multiple components, such as classifiers, detectors, and humans, to leverage their complementary strengths. We also apply uncertainty estimation techniques to make the confidence scores reported by the system more reliable; such scores are critical for helping users decide when to trust the system's outputs.
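The project description does not specify which combination or uncertainty estimation methods ASERT uses. As a minimal sketch, assuming a simple ensemble of classifiers whose class probabilities are averaged (one common way to obtain better-calibrated confidence scores), the code below shows how a combined confidence score could decide when an input is deferred to a human reviewer. The function names `average_probs` and `predict_or_defer`, the 0.8 threshold, and the toy probabilities are all hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical illustration, not ASERT's actual method: average the class
# probabilities of several classifiers, then defer low-confidence inputs to a human.


def average_probs(member_probs: Sequence[Sequence[Sequence[float]]]) -> List[List[float]]:
    """Average per-class probabilities across ensemble members.

    member_probs[m][i][c] is model m's probability for class c on input i.
    """
    n_models = len(member_probs)
    n_samples = len(member_probs[0])
    n_classes = len(member_probs[0][0])
    return [
        [sum(member_probs[m][i][c] for m in range(n_models)) / n_models
         for c in range(n_classes)]
        for i in range(n_samples)
    ]


def predict_or_defer(member_probs, threshold: float = 0.8) -> List[Tuple[int, float, bool]]:
    """Return (label, confidence, defer_to_human) for each input.

    The confidence is the highest averaged class probability; inputs whose
    confidence falls below the (hypothetical) threshold are flagged for review.
    """
    results = []
    for probs in average_probs(member_probs):
        label = max(range(len(probs)), key=probs.__getitem__)
        confidence = probs[label]
        results.append((label, confidence, confidence < threshold))
    return results


# Toy outputs from three hypothetical classifiers on two inputs (two classes each).
member_probs = [
    [[0.90, 0.10], [0.60, 0.40]],
    [[0.80, 0.20], [0.30, 0.70]],
    [[0.85, 0.15], [0.50, 0.50]],
]

for label, confidence, defer in predict_or_defer(member_probs):
    print(f"label={label} confidence={confidence:.2f} defer_to_human={defer}")
```

Here the three models agree on the first input, so its averaged confidence stays high, while their disagreement on the second input pulls its confidence down to about 0.53 and routes it to a person. A fielded system would typically also check that such scores are calibrated on held-out data before relying on a fixed threshold.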

We are collaborating with the USAF-MIT AI Accelerator’s Robust AI Development Environment project on an open-source toolbox that supports configurable, repeatable, and scalable AI research and development. ASERT uses the toolbox to investigate new techniques, such as diagnostics that help explain model behavior, and to analyze and enhance existing AI models and systems, such as models that assess damage to buildings from overhead imagery.