Integrated Physiological Sensors Platform

With our Integrated Sensors Platform, software developers can collect, fuse, and analyze data from multiple physiological sensors to help evaluate how well users perform with the software under development. Users' physio-behavioral responses as they interact with an application can give developers a quantitative assessment of how capable and effective a software tool is for complex tasks such as air traffic control and cybersecurity.

Motivation

When developing software applications, designers work with potential users to gauge how successfully they can use new software tools. Designers typically evaluate a tool's utility through interviews with beta testers, observations of users interacting with the software, and outcome measures (e.g., time taken to complete a task, accuracy of the completed assignment). The data from these largely qualitative assessments, while insightful, are filtered through the users' own perspectives or the observers' presumptions, and so are interpreted subjectively.

Lincoln Laboratory Concept

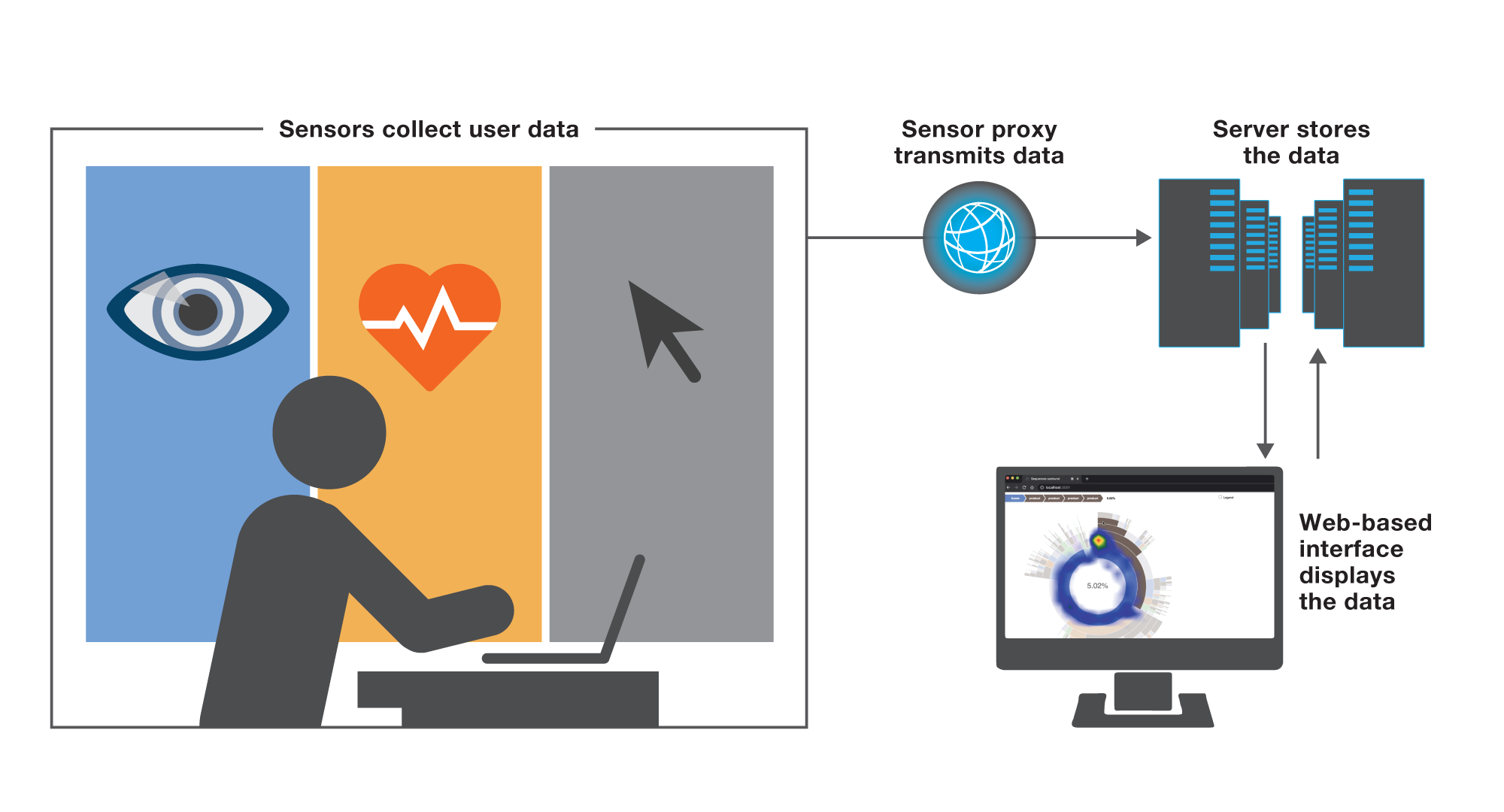

Behind the development of the Integrated Sensors Platform is the idea that quantifying physiological factors, such as eye movement and heart rate, can shed light on how users interact with software tools. Because these factors indicate users' cognitive states (for example, attention span, cognitive workload, stress, and fatigue), they provide a data-driven view of how comfortable, stress-free, and intuitive the software is for its intended users. Other researchers have correlated single physio-behavioral cues, such as eye movements, with responses to a computer-based task, but most of this work has involved relatively uncomplicated tasks such as using a webpage or general-purpose software.

Our vision for the Integrated Sensors Platform is a tool that assesses user performance with applications built for complex, demanding tasks faced by operators such as air traffic controllers, pilots, cyber analysts, and logistics managers. The platform's ultimate goal is to draw on many sensors whose disparate data are synthesized and analyzed to build a broad physio-behavioral picture of how users interact with an application.

The current platform incorporates two commercial sensors (a computer mouse with an optical heart rate monitor, and an eye tracker) as well as a custom Lincoln Laboratory monitor that records user actions. To demonstrate that the platform is practical and effective as an assessment tool for most application designers, we chose sensors that are readily available, do not inhibit a user's normal movement, and require little setup or calibration time.
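The platform's internals are not described here, but the basic fusion step (lining up streams that arrive at different rates from different devices) can be sketched. The Python below is a minimal sketch under stated assumptions, not the Lincoln Laboratory implementation: it assumes every stream has already been timestamped on a common clock and sorted by time, and the names `Sample`, `FusedEvent`, `latest_before`, `fuse`, and the one-second `max_age` tolerance are all hypothetical. For each recorded user action, it attaches the most recent heart-rate and gaze readings and discards readings too stale to describe the user's state at that moment.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Any, List, Optional, Sequence


@dataclass(frozen=True)
class Sample:
    """One timestamped reading; `t` is seconds on a shared session clock (assumed)."""
    t: float
    value: Any  # e.g. bpm from the mouse, an (x, y) gaze point, or an action name


@dataclass(frozen=True)
class FusedEvent:
    """A user action annotated with the physiological readings nearest before it."""
    t: float
    action: Any
    heart_rate: Optional[Any]
    gaze: Optional[Any]


def latest_before(stream: Sequence[Sample], times: Sequence[float],
                  t: float, max_age: float) -> Optional[Any]:
    """Value of the last sample at or before t, or None if none exists or it is older than max_age."""
    i = bisect_right(times, t)  # index of the first sample strictly after t
    if i == 0:
        return None  # no reading had arrived yet at time t
    s = stream[i - 1]
    return s.value if t - s.t <= max_age else None


def fuse(actions: Sequence[Sample], heart_rate: Sequence[Sample],
         gaze: Sequence[Sample], max_age: float = 1.0) -> List[FusedEvent]:
    """Align heart-rate and gaze streams to action events; all inputs must be sorted by t."""
    hr_times = [s.t for s in heart_rate]
    gaze_times = [s.t for s in gaze]
    return [
        FusedEvent(
            t=a.t,
            action=a.value,
            heart_rate=latest_before(heart_rate, hr_times, a.t, max_age),
            gaze=latest_before(gaze, gaze_times, a.t, max_age),
        )
        for a in actions
    ]


# Hypothetical example data for one short session
actions = [Sample(0.5, "open_ticket"), Sample(2.0, "click_alert"), Sample(5.0, "submit")]
hr = [Sample(0.0, 72), Sample(1.0, 75), Sample(2.0, 80)]
gaze = [Sample(0.4, (100, 200)), Sample(1.9, (320, 240))]
for event in fuse(actions, hr, gaze):
    print(event)
```

A nearest-preceding join like this keeps each fused record causal, since it uses only readings available when the action occurred; a production pipeline might instead interpolate or resample every stream to a fixed rate.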

Benefits

- Provides insight into application usability that complements anecdotal feedback and observations

- Yields information that could help assess users' workflow or cognitive fatigue during use of the application

- Works with open-source technology and low-cost commercial sensors to help reduce costs for developers

- Uses built-in integration and analysis capabilities that allow application designers with little or no background in data analysis to benefit from the tool
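As an illustration of the kind of summary that built-in analysis could hand to a designer without a data-analysis background, the sketch below averages heart rate over each task window and reports the change from a resting baseline. It is a hypothetical example, not the platform's actual analysis: the function `summarize_tasks`, the `(seconds, bpm)` input format, the `(label, start, end)` task windows, and the use of a single resting baseline are all assumptions. A rise over baseline is at most one cue of elevated stress or workload and would need to be read alongside other signals.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class TaskSummary:
    label: str
    n_samples: int
    mean_bpm: Optional[float]             # None if no heart-rate samples fell in the window
    delta_from_baseline: Optional[float]  # mean_bpm minus the resting baseline


def summarize_tasks(hr: Sequence[Tuple[float, float]],
                    tasks: Sequence[Tuple[str, float, float]],
                    baseline_bpm: float) -> List[TaskSummary]:
    """Mean heart rate per task window [start, end), compared with a resting baseline."""
    summaries = []
    for label, start, end in tasks:
        window = [bpm for t, bpm in hr if start <= t < end]
        if window:
            m = fmean(window)  # always returns a float
            summaries.append(TaskSummary(label, len(window), m, m - baseline_bpm))
        else:
            summaries.append(TaskSummary(label, 0, None, None))
    return summaries


# Hypothetical session: (seconds, bpm) samples and (label, start, end) task windows
hr = [(0, 70), (1, 72), (2, 74), (10, 85), (11, 87), (12, 89)]
tasks = [("read_brief", 0, 5), ("triage_alert", 10, 15), ("idle", 20, 25)]
for s in summarize_tasks(hr, tasks, baseline_bpm=70):
    print(f"{s.label}: n={s.n_samples}, mean={s.mean_bpm}, delta={s.delta_from_baseline}")
```

Reporting the sample count next to each mean makes it easy to spot windows with too little data to interpret, such as the empty `idle` window above.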

Additional Resources

More Information

H.X. Li et al., "Integrated Sensors Platform: Streamlining Quantitative Physiological Data Collection and Analysis," in Proceedings of the 2022 International Conference on Human-Computer Interaction, Lecture Notes in Computer Science, 16 June 2022.